Over the past few months, we have been thinking about how Large Language Models (LLMs) could radically improve both the healthcare programs that D-tree delivers and the efficiency of our operations. LLMs could enable better training and support for healthcare workers, streamline administrative tasks, and provide clients with on-demand health information. These improvements would not only enhance the quality of health services but also make healthcare more accessible and responsive to those who need it most. However, although the potential gains could be transformative, there are significant risks to mitigate. For example, the tendency of LLMs to invent false information could have serious consequences in a healthcare setting.

In short, we are excited about the potential of LLMs but mindful of the risks their use poses. We are therefore starting to explore the technology through a set of use cases where LLMs can add meaningful value to our work with minimal risk. This approach lets us experiment with LLMs in real-world scenarios while ensuring safety: we can observe how users engage with LLM-powered tools and gain valuable insights into the benefits, challenges, and costs involved. What we learn from these explorations, described below, will guide our long-term strategy for integrating LLM technology into our work.

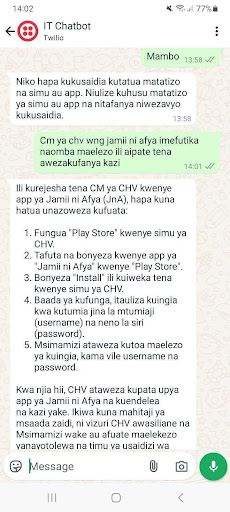

A chatbot that empowers CHWs and their supervisors to resolve technical issues with digital tools

We have just tested a Swahili-language WhatsApp chatbot that we built ourselves using Open Chat Studio, a toolkit developed by Dimagi. The chatbot empowers community health workers (CHWs) and their supervisors in Zanzibar to independently fix technical problems that frequently occur with the phones and apps CHWs use in their work. Currently, CHWs first seek support from their supervisors, and the issue is escalated to technical program staff if necessary. This process demands time and coordination from several people, time that could be better spent delivering and improving health services. We believe that a chatbot could vastly reduce the time and effort required.

Whilst testing the chatbot, we were excited to see that some CHWs and supervisors successfully resolved several issues by interacting with it, and that they were enthusiastic about using the tool. However, we also observed that not all users could phrase their questions in a way that allowed the chatbot to give a useful response, and for some users this was the most significant barrier to getting value from the tool. This tells us that we should train users in how to interact effectively with the chatbot, rather than assume that everyone will work this out for themselves.

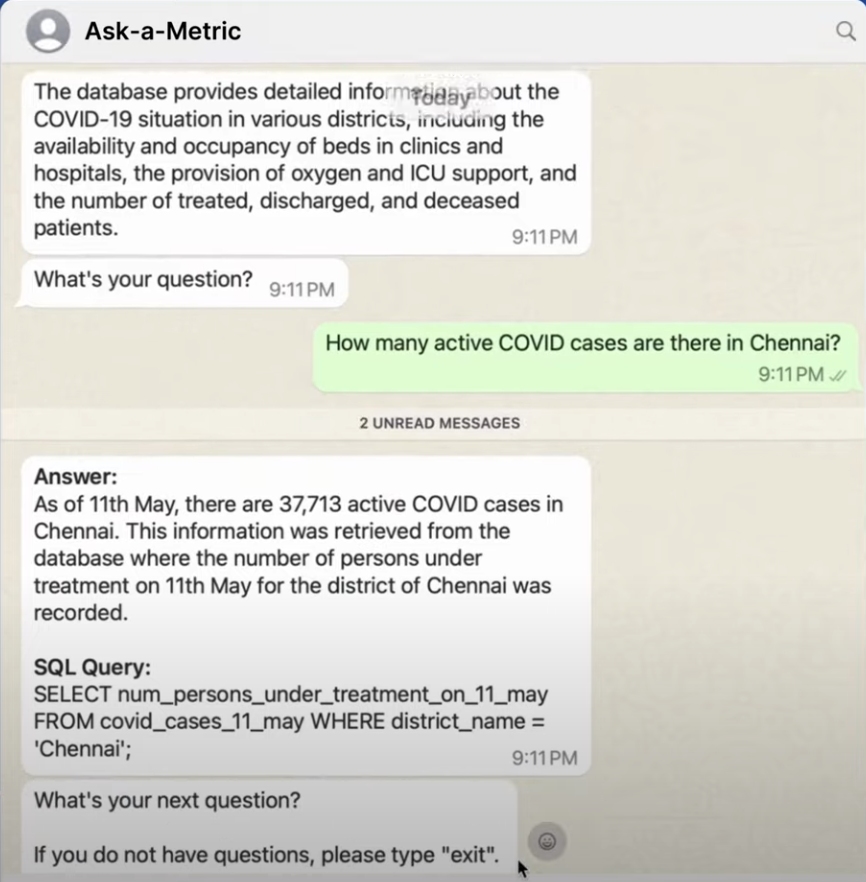

A data analyst chatbot that answers questions about program indicators

We are partnering with IDinsight to trial their Ask-A-Metric chatbot tool on our program data. The tool will be able to query our database to answer simple analysis questions about our health programs, such as ‘How many children were reported to have symptoms of severe malnutrition in Zanzibar last month?’

Although a well-managed set of dashboards should enable all stakeholders to access the data they need, in practice it is difficult to cater to everyone’s needs when there are several programs, each with a lot of data and multiple groups of stakeholders. As a result, anyone who needs data that isn’t available on a dashboard must ask a data analyst to query the database for it. This causes delays and interrupts technical staff. A chatbot that performs the basic tasks of a data analyst would let data users obtain the data they need immediately and free up technical staff to focus on complex tasks that a chatbot cannot perform.

An AI assistant to support CHW supervisors to monitor and manage the performance of their CHWs

With funding support from the Bill & Melinda Gates Foundation, we are partnering with Dalberg Data Insights to design an LLM-powered tool that will help CHW supervisors monitor and manage the performance of their CHWs more efficiently.

In theory, supervisors have access to all the information they need to ensure that their CHWs, and therefore the community health program as a whole, are reaching health service delivery targets. In practice, however, CHW supervisors are extremely busy and juggle multiple tasks, of which supervising a team of CHWs is just one. We will therefore explore whether an AI assistant can reduce the time and effort supervisors need to manage their CHWs effectively.

LLM tools that make our software developers more efficient

D-tree’s software developers are using an LLM-powered tool called GitHub Copilot to help them write high-quality code more efficiently. Copilot suggests completions as code is being written, which cuts down the time developers spend writing repetitive ‘boilerplate’ code. As a result, our team can dedicate more time to writing and testing complex code, and to engaging with the users of our digital tools to ensure their needs are fully addressed.

ChatGPT helps us to write blogs like this!

We hope you enjoyed reading this blog, which was written by a human and thoughtfully edited with the help of ChatGPT.